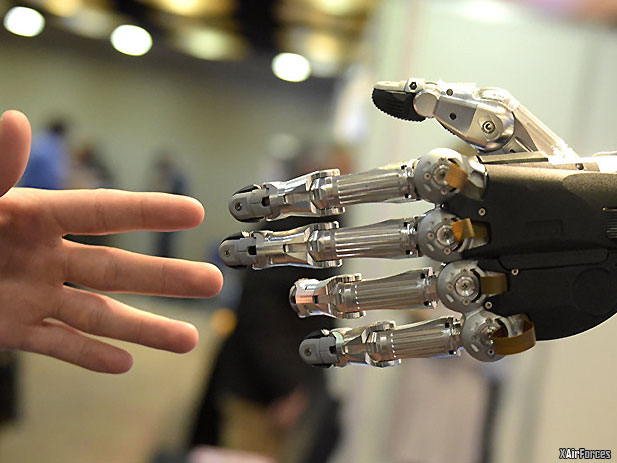

DARPA Teaches Robots Manners

The Defense Advanced Research Projects Agency has developed a general model "for what norms and normative networks are" and how they can be turned into interactive algorithms for training tomorrow’s robots to be more like us, or at least like those of us who weren't born in a barn.

Researchers developed a “cognitive-computational model of human norms in a representation that can be coded into machines,” according to DARPA’s website. Further, a smart algorithm has been developed that “allows machines to learn norms in unfamiliar situations drawing on human data,” the weapons-developing agency said. If smart robots are ever to become “trustworthy collaborators with human partners,” they will need to obey basic intuitive norms about human interaction.

According to the Pentagon’s account, the agency has achieved a significant, if mostly confidential, breakthrough in philosophy: training robots to recognize value judgments like good and bad, right and wrong. These human faculties are critical for tasks that until now have been strictly human, like planning, setting goals and organizing, and will be incorporated, at least on a basic level, into the robots of the future.

Researchers sought to understand "and formalize human normative systems and how they guide human behavior, so that we can set guidelines for how to design next-generation AI machines that are able to help and interact effectively with humans," DARPA program manager Reza Ghanadan said in a release.

The research was led by Bertram Malle of Brown University in Rhode Island, in cooperation with partners at Tufts University outside of Boston.

Norms are sometimes taught to us as schoolchildren, but humans can also pick them up implicitly, and they eventually fall into the category of common sense. Behaviors such as silencing a cell phone in a library and knowing the right point in a conversation or exchange to say thank you seem natural, but they are learned. DARPA aims to teach these behaviors to robots, too.

Nevertheless, significant hurdles remain in the task of humanizing tomorrow’s robots.

Humans have a range of modalities for learning and applying normal behavior, while robots have yet to reach a point in their development where they can learn basic norms in similar ways. This complicates the task, says Ghanadan, because there are many uncertainties in human modalities like perception, inference and instruction. The "inherent uncertainty in these kinds of human data inputs make machine learning of human norms extremely difficult," he said.

The goal is to make robots that are not only useful, but socially and ethically sensible, too. This represents a giant leap beyond guiding people through tax filings or detecting fake news, as some smart algorithms are currently programmed to do.

"If we’re going to get along as closely with future robots, driverless cars, and virtual digital assistants in our phones and homes as we envision doing so today, then those assistants are going to have to obey the same norms we do," Ghanadan said.

Ghanadan doesn’t address, however, questions about how robots might react to changing norms in human life, nor how robots themselves might play a role in how societal norms change.

Sputnik previously reported that tech firms have enlisted virtual and augmented reality scenarios to condition robots to complete household and industrial tasks. The hope is that bots will sweep our floors, water our plants or put together car frames in VR scenarios, and thus “learn” how to complete these tasks.

Source: SPUTNIK By Grant Ferowich / Military & Intelligence News - sputniknews.com - 21 June 2017

Photo: DARPA Teaches Robots Manners (Photo by © AP Photo/ GERARD JULIEN)

(21.06.2017)
